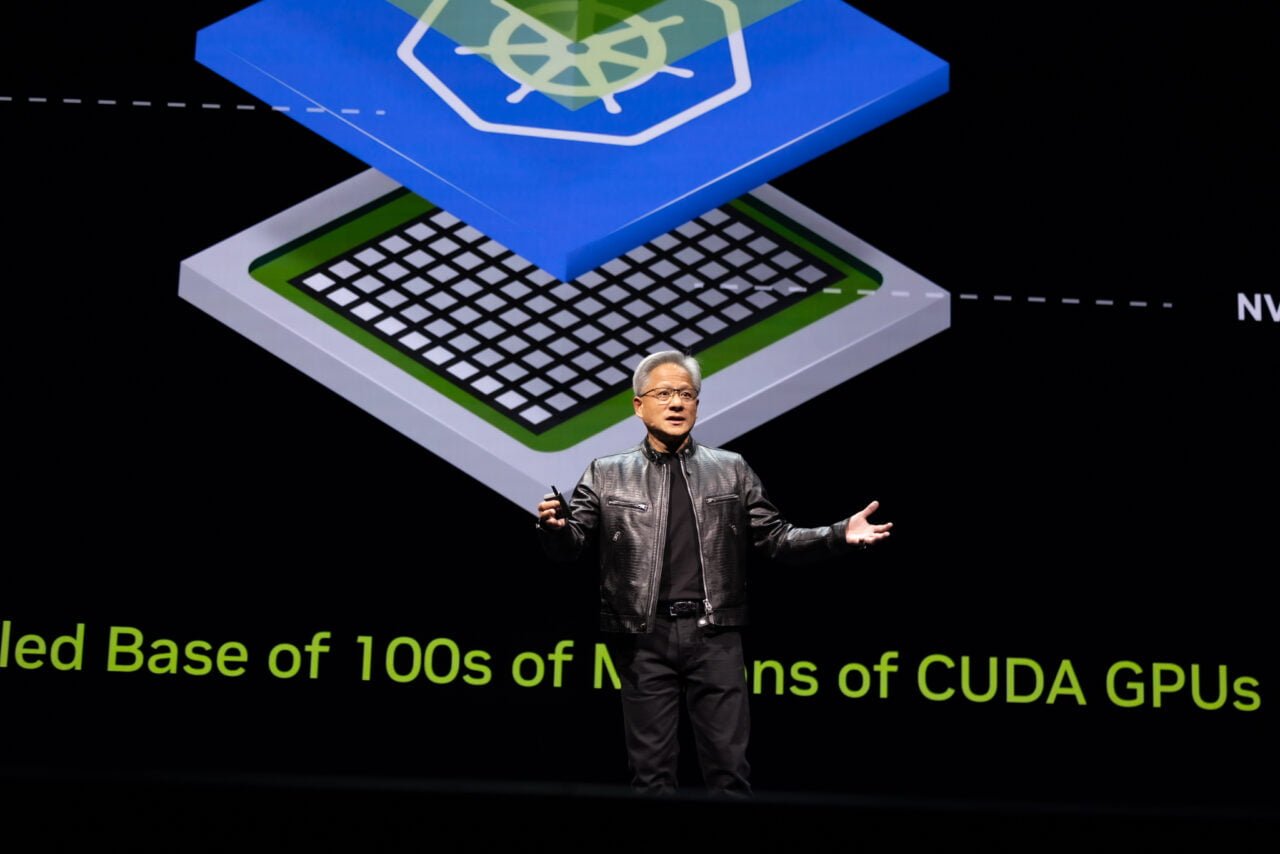

At the COMPUTEX keynote, NVIDIA founder and CEO Jensen Huang announced new systems, networking, and software aimed at reshaping data centers and enterprise operations around AI.

Leading Computer Manufacturers Join NVIDIA to Build AI Factories and Data Centers

NVIDIA, working with leading global computer manufacturers, introduced a range of new systems built on the NVIDIA Blackwell architecture, with Grace CPUs and NVIDIA networking. The goal is to let enterprises build AI factories and data centers for the next wave of generative AI. Partners including ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, Pegatron, QCT, Supermicro, Wistron, and Wiwynn will deliver AI systems for cloud, on-premises, embedded, and edge applications using NVIDIA GPUs and networking (NVIDIA).

These systems are expected to improve the performance and efficiency of AI workloads in data processing and manufacturing. The collaboration reflects a shared effort to advance AI-driven manufacturing and data processing, capabilities seen as critical for the next industrial revolution.

NVIDIA Enhances Ethernet Networking for Generative AI

To strengthen AI infrastructure, NVIDIA announced widespread adoption of its Spectrum-X Ethernet networking platform, which is designed to deliver the networking performance that advanced AI workloads require. Early adopters include AI cloud service providers CoreWeave, GMO Internet Group, Lambda, Scaleway, STPX Global, and Yotta, which are using Spectrum-X to improve the performance of their AI infrastructure (NVIDIA).

In addition, ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, Pegatron, QCT, Wistron, and Wiwynn have integrated Spectrum-X into their product offerings, with the aim of meeting the networking demands of generative AI applications.

NVIDIA NIM Transforms Model Deployment for Developers

NVIDIA also introduced NVIDIA NIM, a set of inference microservices that provide optimized model containers for deployment across clouds, data centers, or workstations. NVIDIA says this will let the world’s 28 million developers build generative AI applications such as copilots and chatbots in minutes rather than weeks (NVIDIA).

NVIDIA NIM simplifies the deployment of complex AI models, which often involve multi-model systems for generating text, images, video, and speech. This platform allows developers to quickly integrate generative AI into their applications, enhancing productivity and maximizing the return on infrastructure investments.
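To make the idea of integrating a containerized inference microservice more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not NVIDIA's documented client: it assumes a microservice is already running locally and accepts OpenAI-style chat completion requests. The base URL, the model name, and the helper functions `build_chat_request`, `extract_reply`, and `ask` are all hypothetical names introduced for this example.

```python
import json
import urllib.request

# Assumptions (not from the article): a microservice container is running
# locally and serves an OpenAI-style chat completions route. The URL and
# model name below are hypothetical placeholders.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "example/chat-model"


def build_chat_request(prompt, model=MODEL_NAME, base_url=BASE_URL, max_tokens=128):
    """Build (but do not send) an HTTP POST request for a chat completion."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style JSON response body."""
    parsed = json.loads(response_body)
    return parsed["choices"][0]["message"]["content"]


def ask(prompt):
    """Send the request and return the reply; needs a running service."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=30) as resp:
        return extract_reply(resp.read())
```

With a service actually running at the assumed address, `ask("Summarize this ticket in one sentence.")` would return the model's reply as a string. Keeping request construction separate from sending means the payload can be inspected or unit-tested without a live endpoint.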

Impact on Industries and Future Prospects

Taken together, these announcements show NVIDIA’s commitment to advancing AI technology across multiple industries. By partnering with leading computer manufacturers and cloud service providers, NVIDIA aims to make its AI systems widely available and able to meet the demands of modern data processing and manufacturing environments.

The introduction of NVIDIA NIM is particularly noteworthy because it broadens access to sophisticated AI tools for millions of developers worldwide. That accessibility is expected to spur innovation and lead to more advanced AI applications in sectors such as healthcare, finance, and manufacturing.

Looking Ahead

As NVIDIA continues to push the boundaries of AI technology, the future of data centers and enterprise operations looks promising. With robust networking solutions, powerful AI systems, and accessible deployment tools, NVIDIA is well-positioned to lead the industry into a new era of AI-driven innovation.